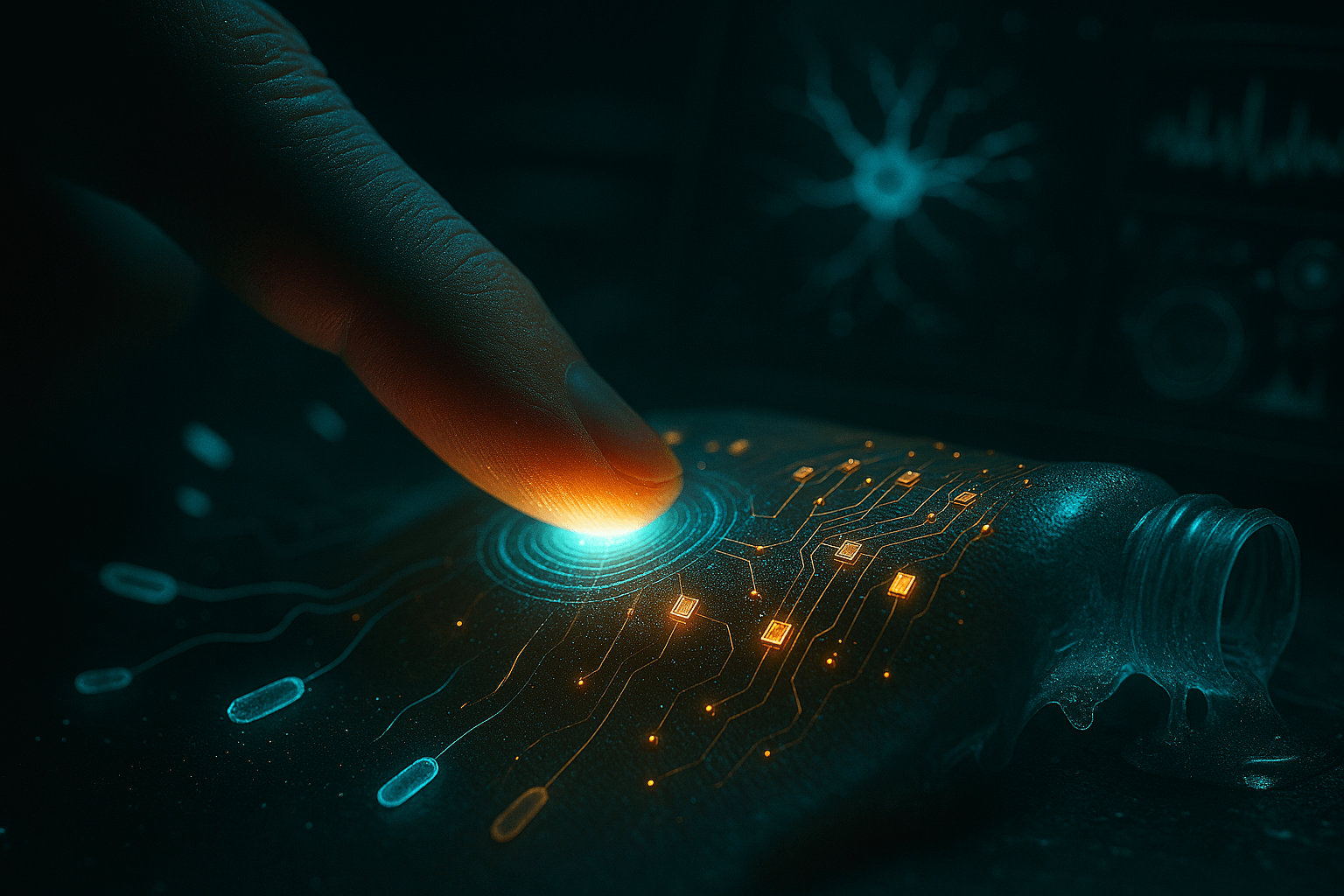

The probe detects a subtle but revolutionary signal from the boundary between machine and flesh: a prosthetic hand closing around a loved one’s fingers, transmitting not just pressure, but genuine warmth and texture. What happens when touch—the most intimate human sense—returns to those who lost it, and awakens for the first time in machines?

Scanning deeper: electronic skin is bridging the gap, allowing robots and prosthetics to perceive pressure, temperature, and pain with near-human fidelity, transmitting those sensations directly to nerves or AI cores.

🤖 What Is “Artificial Skin”?

Artificial skin, also known as electronic dermis or e-skin, is an ultra-thin (often ~50 micrometers), flexible material made from silicone, graphene, or self-healing polymers. It is packed with millions of microsensors that replicate human mechanoreceptors, thermoreceptors, and nociceptors.

Signals feed into a microchip that converts them into electrical impulses, then—via neural interfaces—directly into the human nervous system or a robot’s control AI.

In essence, the material doesn’t merely detect—it truly feels.

⚙️ How It Works

- Sensory layer: piezoelectric and capacitive sensors capture touch, temperature (±0.1°C accuracy), and pain (through micro-crack detection);

- Neural encoder: AI translates raw data into neural patterns indistinguishable from biological signals;

- Neurointerface: flexible nanowire arrays or optogenetic fibers deliver impulses to peripheral nerves or the somatosensory cortex.
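To make the three stages above more concrete, here is a minimal Python sketch. It is an illustration only, not the encoding any real e-skin uses: the `SensorReading` type, the linear rate code in `encode_rate`, and every numeric limit are assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    """One sample from the sensory layer (hypothetical structure)."""
    pressure_kpa: float
    temperature_c: float
    crack_detected: bool  # stand-in for the "pain" channel


def encode_rate(reading, max_pressure_kpa=100.0, max_rate_hz=200.0):
    """Neural encoder: map pressure linearly to a pulse rate.

    A simple rate code is assumed here; the limits are made-up values.
    """
    clamped = min(max(reading.pressure_kpa, 0.0), max_pressure_kpa)
    rate = max_rate_hz * clamped / max_pressure_kpa
    if reading.crack_detected:
        rate = max_rate_hz  # in this toy model, pain saturates the channel
    return rate


def pulse_times(rate_hz, window_s=0.1):
    """Neurointerface: evenly spaced pulse timestamps within one window."""
    if rate_hz <= 0:
        return []
    period = 1.0 / rate_hz
    count = round(window_s * rate_hz)
    return [i * period for i in range(count)]


reading = SensorReading(pressure_kpa=50.0, temperature_c=30.0, crack_detected=False)
rate = encode_rate(reading)
print(rate, len(pulse_times(rate)))
```

A real encoder would also have to carry temperature and texture, and would be tuned per patient; the sketch only shows how a sensor value can become a train of timed pulses.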

AI plays a central role: it classifies touch types—gentle caress versus sharp prick, velvet versus sandpaper—and even retains short-term “texture memory” for up to 30 seconds, mirroring natural skin behavior.
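The classification and short-term “texture memory” described above can be sketched in a few lines of Python. This is a toy model under stated assumptions: the threshold rules in `classify_contact` and `classify_texture` are invented for illustration (a real system would use a trained model), and the only figure taken from the text is the 30-second retention window.

```python
from collections import deque


def classify_contact(pressure_kpa, contact_area_mm2):
    """Label a contact as a gentle caress or a sharp prick (toy rule)."""
    # Assumption: high pressure concentrated on a tiny area reads as a prick.
    if pressure_kpa > 50.0 and contact_area_mm2 < 1.0:
        return "sharp prick"
    return "gentle caress"


def classify_texture(roughness):
    """Label a surface from a hypothetical 0..1 roughness score."""
    return "sandpaper" if roughness > 0.5 else "velvet"


class TextureMemory:
    """Keeps recent texture labels and forgets them after retention_s seconds."""

    def __init__(self, retention_s=30.0):
        self.retention_s = retention_s
        self._events = deque()  # (timestamp_s, label), oldest first

    def remember(self, label, now):
        self._events.append((now, label))

    def recall(self, now):
        # Drop everything older than the retention window, then report the rest.
        while self._events and now - self._events[0][0] > self.retention_s:
            self._events.popleft()
        return [label for _, label in self._events]


memory = TextureMemory()
memory.remember(classify_texture(0.1), now=0.0)
memory.remember(classify_texture(0.9), now=20.0)
print(memory.recall(now=25.0))
print(memory.recall(now=35.0))
```

Timestamps are passed in explicitly rather than read from a clock, which keeps the example deterministic and easy to test.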

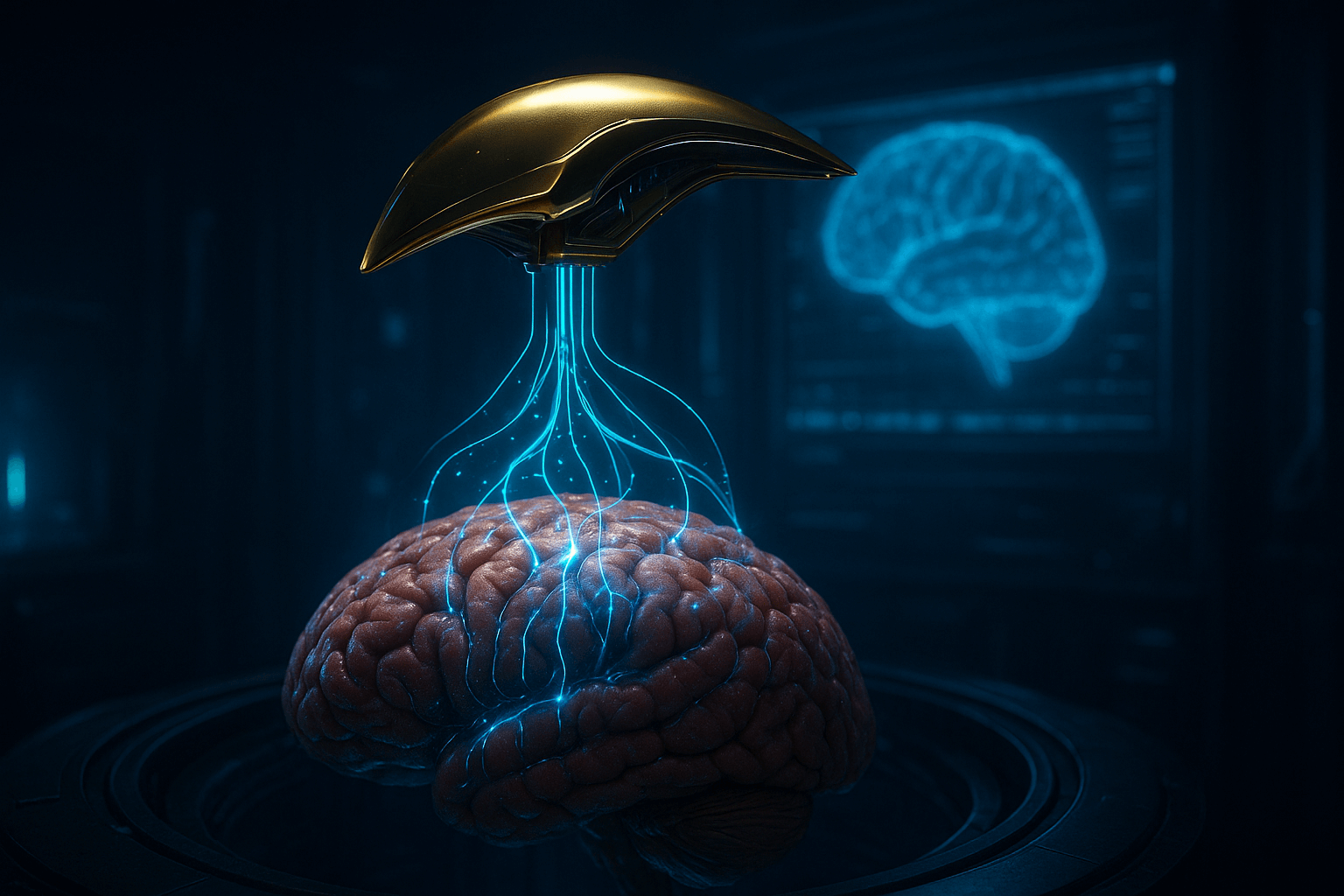

🧠 Breakthrough: Artificial Skin from Seoul National University

In 2024, Seoul National University introduced NeuroSkin v3—a system achieving 95% fidelity to human fingertip sensitivity.

- Detects temperature differences as fine as 0.1°C;

- Senses pressure as light as 0.01 kPa—comparable to a butterfly landing;

- Features sensory memory, recalling texture up to 20 seconds after contact.
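To get a feel for how small 0.01 kPa is, the pressure can be converted into a force. The short Python sketch below does that arithmetic; the 1 cm² contact area is an assumption picked for illustration, not a figure from the research.

```python
G = 9.81  # standard gravity, m/s^2


def force_newtons(pressure_kpa, area_cm2):
    """Force = pressure x area, with kPa -> Pa and cm^2 -> m^2 conversions."""
    return (pressure_kpa * 1000.0) * (area_cm2 * 1e-4)


def equivalent_mass_grams(force_n):
    """Mass whose weight equals the given force."""
    return force_n / G * 1000.0


force = force_newtons(0.01, 1.0)  # 0.01 kPa over an assumed 1 cm^2
print(f"{force * 1000:.2f} mN, about {equivalent_mass_grams(force):.2f} g")
```

Under that assumption, 0.01 kPa works out to roughly one millinewton, the weight of about a tenth of a gram.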

NeuroSkin v3 is already integrated into robotic hands and bionic prosthetics. Clinical trial participants using Utah Slant Array interfaces described the sensations as “almost indistinguishable from real skin,” including feeling a pulse in a loved one’s wrist.

🧬 Artificial Nerves

Transmission requires advanced interfaces. Stanford and Caltech teams developed flexible carbon nanotube “nerves” that conduct signals 100 times faster than earlier polymers while integrating seamlessly with living tissue.

In trials, patients could:

- Modulate grip strength in real time without looking;

- Distinguish a grape from a cherry by touch alone;

- Experience long-lost “phantom warmth” from a hot cup.

This restores not just function, but emotional connection through touch.

🦾 When Robots Feel

Beyond medicine, e-skin makes robots safer and more empathetic: they sense grip force on human limbs, detect material stress, and halt instantly on pain signals.
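As a rough illustration of the “halt on pain” behavior, the Python sketch below shows one step of a hypothetical grip controller. The function name `grip_step`, the proportional rule, and all thresholds are assumptions made for this example; they do not describe any specific robot.

```python
def grip_step(force_n, sensed_kpa, pain, target_kpa=5.0, limit_kpa=20.0, gain=0.1):
    """Return the next grip force command in newtons.

    Releases immediately on a pain signal or when pressure reaches the hard
    limit; otherwise nudges the force toward the target contact pressure.
    """
    if pain or sensed_kpa >= limit_kpa:
        return 0.0  # safety stop: let go
    error = target_kpa - sensed_kpa
    return max(0.0, force_n + gain * error)


print(grip_step(2.0, sensed_kpa=3.0, pain=False))   # below target: squeeze a bit more
print(grip_step(2.0, sensed_kpa=25.0, pain=False))  # over the limit: release
print(grip_step(2.0, sensed_kpa=3.0, pain=True))    # pain signal: release
```

Putting the safety check before the control rule means a pain signal always wins, whatever the controller would otherwise have done.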

Tesla Gigafactory manipulators now use e-skin to:

- Handle fragile battery cells without damage;

- Identify micro-cracks in welds by surface stress;

- Collaborate with humans without physical barriers.

⚠️ Problems and Challenges

- Sensors currently degrade after 6–12 months—self-healing polymers are in active development;

- Complex sensations like wetness or tingling demand advanced multi-modal AI encoding;

- Ethical debates intensify: “Can a robot truly experience pain?”—sparking global discussions on machine sentience.

🔮 The Future

- Prosthetics restoring pain, warmth, and tenderness expected in clinics by 2027;

- Robots that intuitively respect human physical boundaries;

- Fully tactile avatars in the metaverse—making virtual hugs feel real.

“When a machine learns to feel, technology finally becomes truly human.” — Dr. Hyeonwoo Lee, lead researcher, Seoul National University

Key signal: touch is returning—not just as data, but as lived sensation—blurring the line between human and machine forever.

The probe releases the signal and fades into shadow: the age of feeling machines has begun.